Cluster Management

Cluster Management is the feature for managing server instances in a cloud environment. Before 0.9.5, server instances had to be prepared in advance and their IP addresses defined in kupboard.yaml. Since 0.9.5, kupboard creates server instances automatically based on the cluster structure defined in kupboard.yaml.

You can also use commands to create, delete, start, and stop instances. These commands are useful when you want to add or remove a node, or change an instance type as your needs change.

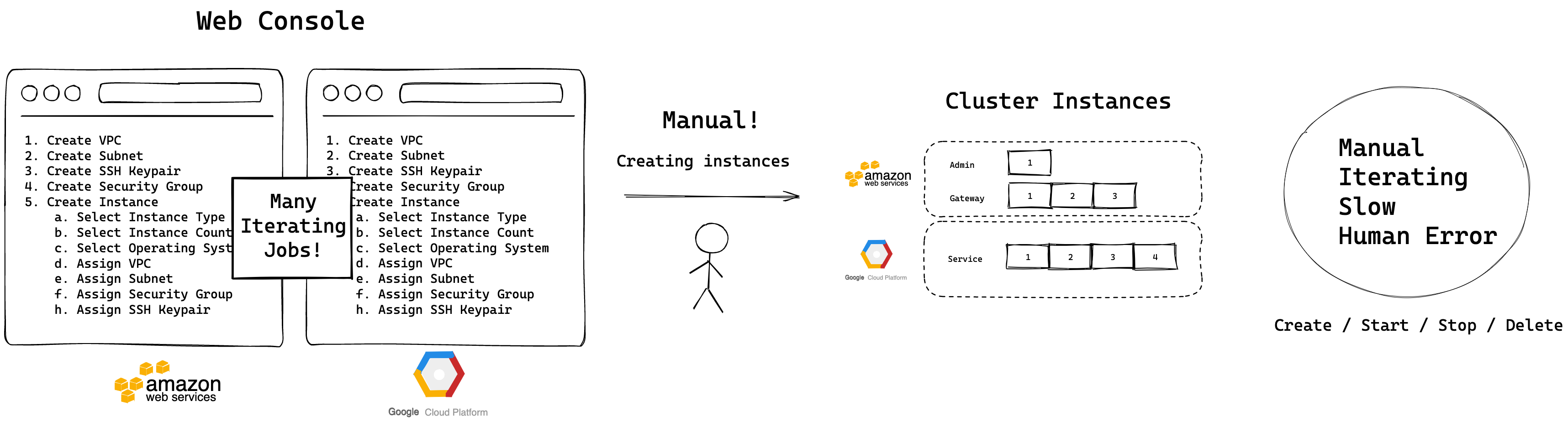

As shown below, Cluster Management automates many repetitive jobs that would otherwise have to be done manually.

Manual Cluster Management

Automatic Cluster Management

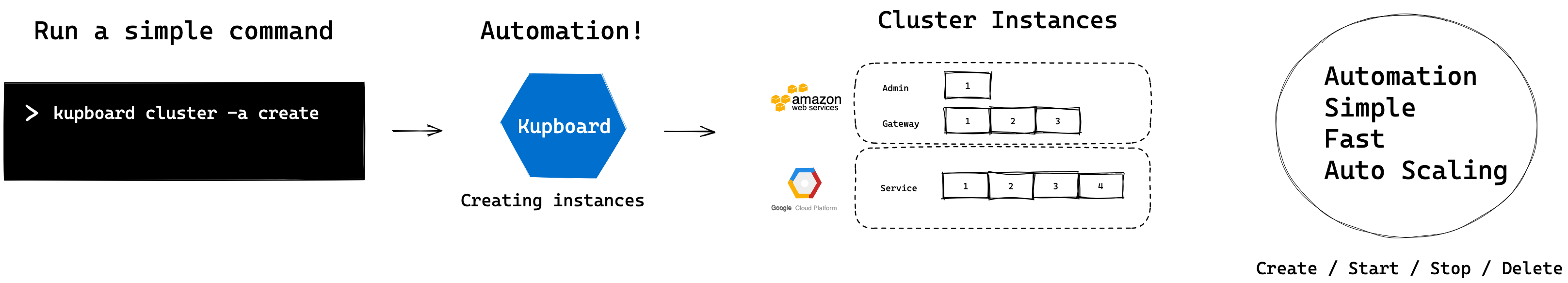

Cluster

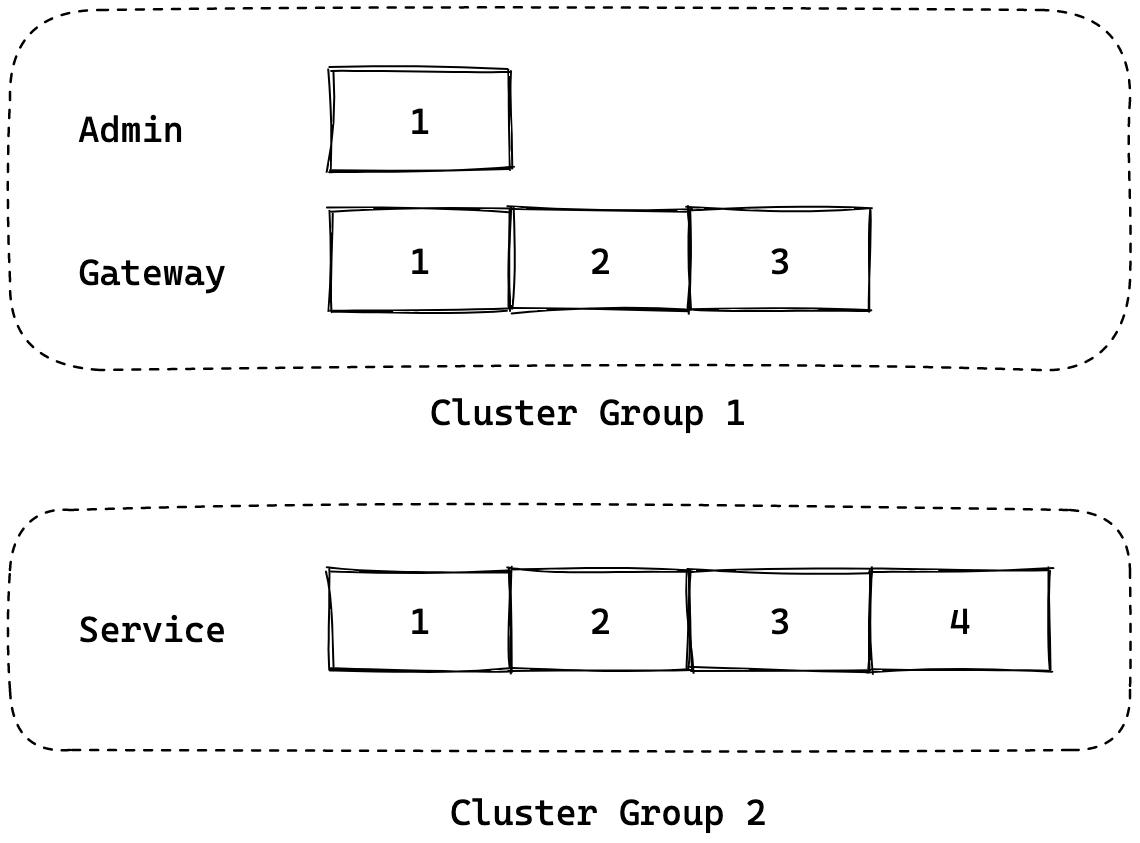

If you want to manage servers manually, you can build a service environment by defining the IP addresses of the servers in the cluster in kupboard.yaml. If the cluster contains 1 admin server, 3 gateway servers, and 4 service servers as shown above, the cluster definition would be as follows:

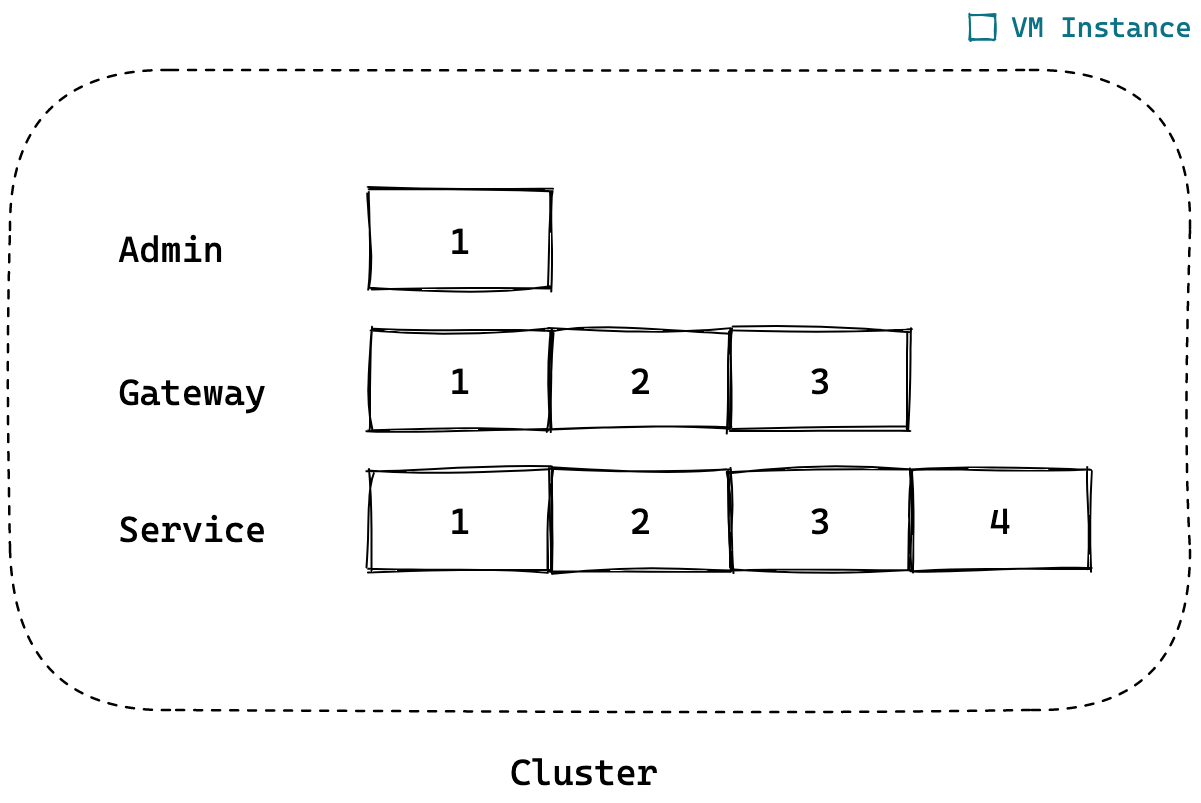

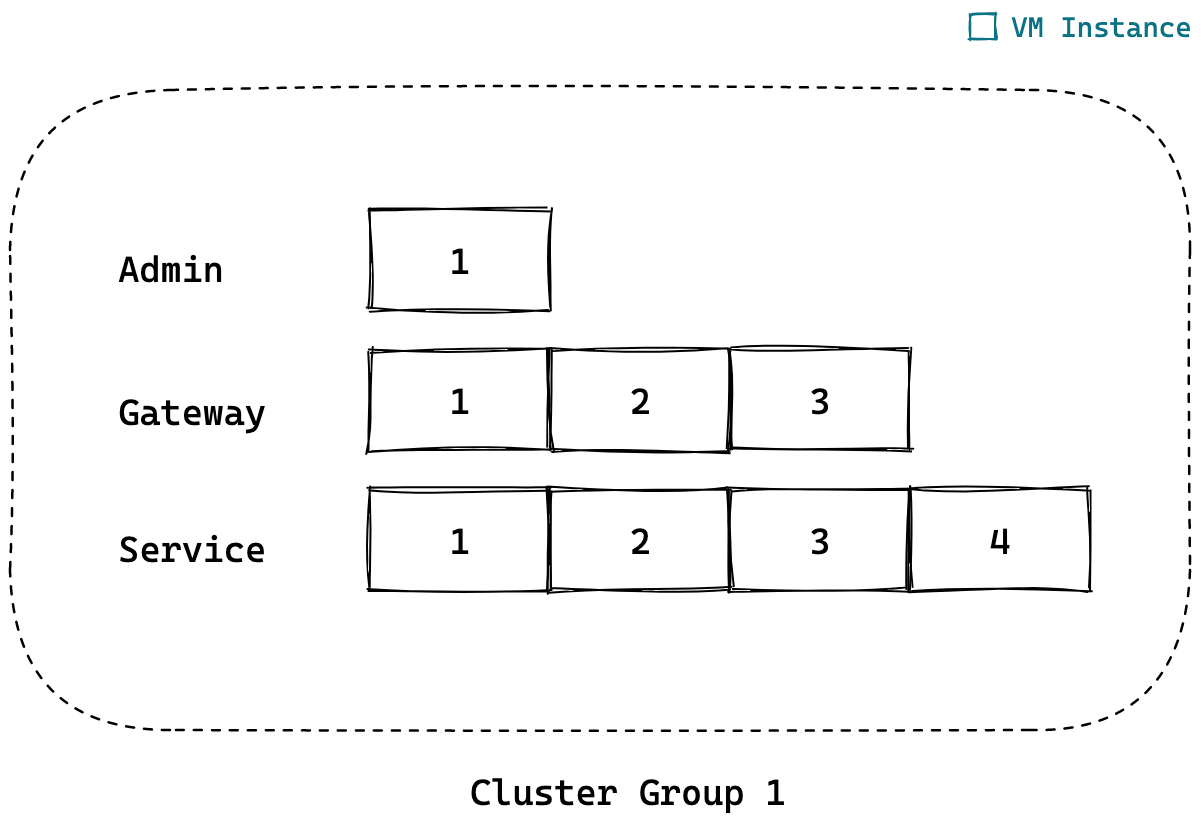

Cluster Group

If you use Cluster Group, you only need to define the names of the clusters and the number of servers in each cluster, instead of the IP addresses of all servers, as follows. Based on this cluster structure, kupboard automatically creates server instances. For more detail, see Cluster Commands.

However, for kupboard to control server instances, it needs the credentials and account information of the cloud provider, which can be defined as below.

If you want to divide a cluster into multiple groups, you can define groups as follows. This is related to Multi Cloud; please see Multi Cloud for more information.
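The idea behind Cluster Group, declaring only cluster names and server counts and letting the tool derive the instances, can be illustrated with a minimal Python sketch. It is not kupboard's implementation and does not reflect the kupboard.yaml schema: the dictionary layout, the function name `plan_instances`, and the `name-index` naming scheme are all assumptions made for illustration. The counts reuse the 1 admin, 3 gateway, 4 service example from the Cluster section.

```python
# Hypothetical sketch: this layout is NOT the kupboard.yaml schema.
# It maps each cluster name to the number of servers it should contain.
cluster_group = {"admin": 1, "gateway": 3, "service": 4}


def plan_instances(group):
    """Expand {cluster name: server count} into a flat list of instance names.

    The "<cluster>-<index>" naming scheme is an assumption for illustration.
    """
    names = []
    for cluster_name, count in group.items():
        if count < 0:
            raise ValueError(f"server count for {cluster_name!r} must not be negative")
        names.extend(f"{cluster_name}-{i}" for i in range(1, count + 1))
    return names


if __name__ == "__main__":
    for name in plan_instances(cluster_group):
        print(name)
```

The point of the sketch is that no IP address appears anywhere in the definition: addresses only become known after the cloud provider has created the instances.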

Cluster Command

- Create or delete all cluster groups

- Create or delete a cluster group

- Create, start, stop or delete an instance

- Update local cluster information from a cloud provider

- Show current cluster information

Providers

The information required to manage instances depends on the cloud provider. Below are examples for AWS, MS Azure, and Google Cloud Platform.

note

Currently Cluster Group supports AWS, MS Azure and Google Cloud Platform.

AWS

Google Cloud Platform

gcp-key-file.json: A key file for your Google project. It must be located in data/certs.
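Because the key file has to sit in data/certs, it can be worth checking for it before running any cluster command. The following is a minimal Python sketch of such a check, not part of kupboard itself: the helper name `find_gcp_key_file` is hypothetical, and the assumption that the file is valid JSON is based on the `.json` extension of the example file name.

```python
import json
from pathlib import Path

# Directory named in the documentation, relative to the working directory.
CERTS_DIR = Path("data/certs")


def find_gcp_key_file(filename, certs_dir=CERTS_DIR):
    """Return the path to the key file, or raise if it is missing or not JSON.

    Hypothetical helper for illustration; kupboard may validate differently.
    """
    key_path = Path(certs_dir) / filename
    if not key_path.is_file():
        raise FileNotFoundError(f"expected key file at {key_path}")
    with key_path.open(encoding="utf-8") as handle:
        json.load(handle)  # raises json.JSONDecodeError if the file is not JSON
    return key_path


if __name__ == "__main__":
    try:
        print(find_gcp_key_file("gcp-key-file.json"))
    except FileNotFoundError as error:
        print(error)
```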

Azure

Setup

When you create a cluster by using the cluster command, a default user with root permission is automatically created. The username is kupboard for GCP and Azure, and ubuntu for AWS.
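If you script access to the created instances, the per-provider default username can be captured in a small lookup. The Python sketch below is an illustration only: the usernames come from the paragraph above, while the provider keys (`aws`, `gcp`, `azure`), the function names, and the example address are assumptions and not kupboard identifiers.

```python
# Default usernames as stated in the documentation.
# The provider keys are assumed labels, not kupboard configuration values.
DEFAULT_USERS = {
    "aws": "ubuntu",
    "gcp": "kupboard",
    "azure": "kupboard",
}


def default_user(provider):
    """Return the default login user for a provider (case-insensitive)."""
    key = provider.strip().lower()
    if key not in DEFAULT_USERS:
        raise ValueError(f"unsupported provider: {provider!r}")
    return DEFAULT_USERS[key]


def ssh_target(provider, host):
    """Build a user@host string for connecting to an instance."""
    return f"{default_user(provider)}@{host}"


if __name__ == "__main__":
    # 192.0.2.10 is a placeholder address from the documentation range.
    print(ssh_target("aws", "192.0.2.10"))
```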